The Discrete Fourier Transform: Most Important Algorithm Ever?

Apr 16, 2024The issue we're going to delve into today is best understood through the lens of music. Suppose we have three notes that are played together as a chord. A natural way to represent this chord is through a graph of the amplitude of the sound signal over time. but in a practical sense, a representation that is much more useful when working with audio processing is just the underlying notes that make up the chord, each chord is a different frequency, as having granularity over which frequencies are present in the original audio It allows you to unlock many powerful tools We want an especially useful tool in the world of sound engineering is an equalizer An equalizer allows you to reduce specific frequencies giving a new signal with a different sound profile, conversely, we can also enhance the frequencies that We would like to highlight and the equalizer is just one of the many tools that rely on a frequency representation of a signal that you and I are going to talk about today is a small but essential piece of how this fundamental idea of representing a signal with their frequencies.

The core concept involves arguably one of the

most

important

algorithm

s in modern technology thediscrete

Fouriertransform

or DFTmost

people learning about DFT for the first time initially find it difficult to understand it is a complex topic that is difficult to teach The goal of today's video is to try to see if we can reinvent the clever

ideas behind adiscrete

Fouriertransform

from first principles to how we will naturally interact with some of the fundamental concepts in digital signal processing. Abroad, the issue of signals is discussed. Most mathematical representations are modeled in a continuous-time environment.The signal could have a value for any real number time input, but in a practical sense no computing machine can represent signals in this way, so naturally what we have to do is sample points from the signal. The most common sampling scheme is to uniformly sample these signals. to get a sequence of points that represents the original continuous signal Nuances involved in sampling continuous signals here's a fun thought experiment let's say I give you a cosine wave with a frequency of 7 cycles per second or seven Hertz how many points do you think we need to obtain accurately? capture the signal, think about it, it's not an easy question by any means, let's start by ruling out possibilities.

Can we get away with representing the signal with seven evenly sampled points? Well, one way to quickly look at the problem here is if our sampling scheme happens unfortunately. Sampling each peak of this wave, the subsequent signal is seen as a constant signal. This, by the way, is commonly called aliasing. If we sample too few points, there are many possible signals that could produce the same samples we get. Okay, so one way to think We probably need to find a way to sample both the peaks and the valleys, so is there a way we can get away with sampling 14 points instead of seven?

Interestingly, there is a way to even break 14 points if you sample evenly. Since you again get a constant signal, it turns out that the correct answer for this question is 15 points. If you display 15 points uniformly, you are guaranteed to accurately represent the 7 Hertz signal. This is an example of a fairly famous theorem. In signal processing called the Shannon Nyquist sampling theorem, there is a lot more material here that I am intentionally overlooking, but the

important

thing I want you to remember is that for any signal of frequency f we strictly need to have more than twice that frequency F sampled points to accurately represent the signal Another equivalent representation is that given n samples of a signal, the highest possible frequency that could exist within the signal is strictly less than n divided by 2.The highest frequencies are not guaranteed to be capture accurately. It will be important later, so keep this fact in mind. A good example of this in practice is that most audio sources have a sampling rate of at least 44.1 kilohertz and the reason is that the human ear generally cannot pick up frequencies above 20 odd kilohertz, these Samples taken from the original signal give us a clear understanding of how the signal changes over time. Now what we show with the equalizers is another representation of the signal, but in terms of their frequencies fundamentally, these two representations give us exactly the same signal.

They are just two different ways of communicating that information. What this frequency representation tells us is that this particular signal is composed of three different waves of frequency 2, 5 and 10 Hertz. The idea of this frequency representation is that if we take these three waves we scale them by their relative strength and add them together we will obtain the original signal or idea of what is called a frequency domain representation. It is extraordinarily important that we understand that all DFT involves is a conversion between a time domain representation to a frequency domain representation, what

ever

it is we want to understand.How we might rediscover a complex idea It really helps to have an understanding of how we would like it to behave. For some simple examples, suppose we have a cosine wave of frequency at Hertz. Ideally, what we would like the frequency domain representation of said signal to be. B is a peak at frequency 2 and 0 for all other frequencies, so in the context of the simplified example we are looking for some operation that produces a high value for an input frequency of 2 and 0 for all other frequencies, so , what does that mean? is the operation we are looking for is fundamentally a measure of similarity when the frequencies match we should get a high similarity score but when they have different frequencies we ideally want to get a similarity with b0 given a sequence of input points of a timing signal .

Basically, we can represent the sequence as a vector. Now, one of the most common ways to represent similarity within the context of vectors is the notion of a DOT product, so let's begin the analysis by seeing how we can properly construct a similarity measure for our original time. domain signal and cosine waves of different frequencies to properly construct a DOT product we need a set of samples of the cosine waves with which we want to compare. As a first attempt, it seems reasonable to sample the cosine wave at the same sampling frequency as the original signal and This works fine when the original signal and the cosine wave match one to one as it does here in this simplified example, but what about other frequency cosine waves?

Theoretically there are infinitely many cosine waves we could compare with in this time segment and we can't evaluate them all, so how do we go about choosing a set of frequencies to compare? To be clear, there are inherently two frequencies involved, one is the frequency of the original cosine wave and the other is what we will call formally. an analysis frequency for any analysis frequency F which we will currently define as a cosine wave with amplitude 1 and frequency f-hat, so when selecting the frequencies we want to compare, remember a key property we are looking for in our representation in the frequency domain.

We want all frequencies other than the intrinsic frequency to have zero contribution, so let's see what happens when we take the dot product of this cosine wave with other frequency cosine waves. Here we will slowly adjust the scan frequency and see what happens to our similarity. One thing to note here is that if you take any random cosine wave of some real number frequency, the contribution is actually almost always non-zero, but there is a specific set of frequencies where things just cancel out perfectly and we end up with a contribution. from zero as we wish, the interesting thing about this particular example is that when our analysis frequencies are defined so that there are an integer number of cycles within the time range, as long as the similarity measure seems to always end up being zero, this seems Something promising, so let's dig in. going a little deeper into this behavior, so far we've been pretty hand waved without addressing what we're looking for in the DFT to go one level further, let's formalize things a little more carefully right now we have a time domain signal that, As mentioned it can be represented as a vector of sampled points that we want to define some transformation that takes this signal in the time domain and gives us a frequency based representation where a certain set of frequencies is assigned a value that represents a contribution of that frequency to the original. signal we already stated that for a simple cosine wave with frequency F, how we ideally want this transform to behave is to have a high value for that frequency F and zeros for all other frequencies.

We saw some promising signs of this behavior when comparing our cosine. wave at different analysis frequencies currently we have a similarity measure that is defined in the dot product between our original signal and the sample points of an analysis frequency and what we are looking for is to perform this operation on a set of analysis frequencies, which type of transformation operation expresses this very well, what we are essentially trying to define is a vector product matrix by representing samples of different cosine wave analysis frequencies as rows of this matrix, we obtain a compact representation of our similarity measure of the DOT product applied to all analysis frequencies that Ideally, what we are looking for are three fundamental requirements that must be satisfied in this specific example of a single frequency cosine wave.

Firstly, we want that when the scan frequency is exactly the same as the frequency of our original signals, the respective values in the frequency domain should be greater than zero and two, when we compare with scan frequencies that have some other value. than the frequency of the original signal, we should get a corresponding dot product of 0 to mean that there was no contribution from those frequencies to the signal, and finally, we would like this transformation. To be reversible given a frequency representation of the signal, we would like to exactly reconstruct the original signal in the time domain.

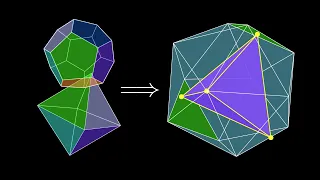

Now it turns out that these requirements can be translated into fundamental mathematical properties of matrices starting from the bottom to make this operation reversible, this mysterious Matrix must be invertible and to fit these two constraints, what we are looking for is an orthogonal matrix, for so we immediately know that our final definition of the Fourier transform must have the same number of analysis frequencies as the number of samples in the signal. otherwise there is no way the Matrix can be invertible. Let's see if our previous definition of analysis frequencies for cosine waves fits the criteria we are looking for, as we have seen above, the nice property of these sample points of analysis frequencies for cosine waves is that when the cosine waves had the same integer frequency, the dot product is not zero and when they have different integer frequencies, they have a DOT product of exactly zero or at least that's what I implied here, some of you may have noticed that this is actually not exact, it turns out that there are particular pairs of analysis frequencies that have a non-zero dot product, even when the underlying frequencies are different; in fact, if we calculate each combination of dot products between these analysis frequencies, we see an interesting pattern for each non-zero frequency. -zero analysis frequency there is exactly another frequency that results in a positive dot product.

What's going on here, well, if we break it down, we see that the problem is that, although these particular frequencies have different underlying cosine waves, the samples themselves are actually identical. is essentially a byproduct of Shannon Nyquist's sampling theorem, since we are only sampling eight points, any frequency four or higher cannot be accurately represented with eight samples, so we end up with an overlap. One thing you may notice here is that a subset of this Matrix is very well defined and one way to overcome this sampling problem is to consider only the first half of any frequency spectrum.

When we use this transformation on any cosine wave of non-zero frequency, there will always be redundant information as a result. Due to the nature of our current definition of the transformation, the biggest problem here is that several rows of our analysis frequency matrix are identical, which in effect means that a matrix is not only not orthogonal but also notIt is investable. Unfortunately, this is a serious problem. Investibility is an essential requirement for us to reverse our transformation as a result. Miner spoiler here, this isn't the actual Fourier transform, but sometimes from an engineering perspective it's that we know it's wrong and we see where things break down before we dive into how the frequency breaks down via DFT actually. works.

I want to pause briefly to talk about some similar types of activities that occur every day. In some countries it is legal to monitor your Internet traffic and create an online profile of you, basically assimilating. a signal that is a function of web activity and breaking it down into a fairly precise advertising profile that perhaps can also be sold and used maliciously when browsing online. This is not something any of us would like to think about, which is why I recommend sponsor nordvpn. of the current video, nordvpn prevents third parties from intrusive tracking and creating such a profile.

It's also great in areas where ISPs may intentionally slow your connection. In addition to these essential features, one of my favorite uses for NordVPN is when I travel, NordVPN can give you a fake location in any of the 60 countries it allows you to use. Get access to shows and entertainment you normally can't get. The ability to connect six devices simultaneously allows you to take these features wherever you go. Nordvpn has been in this business for years, making it easier than ever to set up, they have earned a reputation that values trust and cybersecurity. If you are interested, visit reduceable nordvpn.com to get the two-year plan with a exclusive offer plus a free bonus month.

You're risk-free with nordvpn's 30-day money-back guarantee. Thanks to nordvpns for sponsoring this video. Going back to our previous question we know that our original transform has a problem with invertibility and to see where things go wrong, let's look at what happens when we try to apply this fake Fourier transform to a variety of signals of different definitions, let's think about some test cases to establish some ground. rules surrounding our assumptions, all transformations from now on will be taken from 16 points of the original signal and we simply display half the frequency spectrum, this allows us to at least capture frequencies up to seven according to the Shannon Nyquist theorem, let's begin With what we have been doing so far with cosine waves, the first case is that we want any change in amplitude to be appropriately reflected in the strength of the respective frequency in the frequency domain and when we gradually change the amplitude of the original signal , we actually see this working as the expected amplitude changes appear to be adequately captured by our fake Fourier transform.

The next test case is to change the frequency of the cosine wave. What we expect to happen is for our Peak test transform to update correspondingly to the new frequency. from frequency changes and indeed that is the case, the peak moves in the frequency domain as the frequency of the original signal changes, let's see what happens when we change the y-intercept of our cosine waves, when We shift these waves up, we are essentially adding a zero frequency signal and our fake Fourier transform encapsulates that information into the contribution to the zero analysis frequency. Alright, so far nothing has clearly broken, so let's make our test cases a little more complex.

Here is a combination of different cosine waves and let's see. If our test transform adequately extracts all frequency components, we want an ideal Fourier transform to extract exactly these frequencies. When we pass the sample point of the signal to our test transform, we actually get those frequencies, the fundamental reason why What this works is that our transform is just a matrix multiplication so it's linear, what that means is that the output of this transformation on this aggregated signal is exactly the same as if we performed the transformation on the individual signal separately and then we add the outputs until now we have focused on cosine waves.

Let's now see what happens when we use sine waves. What we expect is that if we pass a sine wave of some frequency, we should ideally show that same frequency in our frequency spectrum, so let's try it, what we get seems a bit surprising. all the frequency components are zero and if we investigate a little further it turns out that if we take the dot product between this cosine wave analysis frequency and the matching sine wave frequency, the dot product is in fact zero and interestingly this is true for any frequency. Sine waves and cosine waves of matching frequencies are orthogonal to each other.

I would test the transformation as it is currently built. I won't be able to extract frequency information from the sine waves to understand what is happening. We have to go back to our test cases. We tested the changing amplitudes. We test shifting frequencies and we also test shifting signals up and down, but another component of the signals we can shift is phase. Remember that what we expect to happen when we change the phase is that the intensity of our frequency component should remain the same after all. the same frequency still present in the original signal was simply shifted through time, but what happens with our test transformation is that unfortunately the intensity of the frequency is affected by the phase.

The sine wave example is just a specific case when the phase is offset by 90 degrees, so fundamentally this is where our false Fourier transform breaks. Let's now see how we can solve it well in the case that we originally had a sine wave. An ingenious way to solve this problem is to use analysis frequencies that correspond to sine waves instead of cosine waves. With the sine wave version of the transform, each frequency sine wave appropriately gets a frequency contribution after passing through our transform. test, but as you might expect, this breaks our original cosine wave comparisons where we now end up with the same problem, so there seems to be some kind of balance that needs to be achieved here, but how exactly can we get it right?

Let's play with these separate sine and cosine wave analysis frequencies and see what happens given a signal, modify the phase of the signal and capture values of both cosines. and sine versions of our fake transforms, what is happening here is that we are capturing the dot product of the matching analysis frequency for our original signal for both versions of these transforms, as you can see when the underlying wave is a cosine wave, the Cosine component dominates When the phase is changed to a sine wave, the sine component takes control. One thing that's interesting here is that these values are never simultaneously zero and there's also kind of a funny dance between them.

One idea that seems interesting is to see what happens when we plot these values on a 2D coordinate plane and what is quite interesting is that we see these coordinates plot a circle as we change the phase of the original signal. How cool is that, but the question remains how do we put this together in a way that solves. our phase problem as a reminder, the original problem we are trying to solve here is that when we were changing the phase of our signal, we got different frequency contributions from the test transform or ideally we want our frequency contribution to stay the same .

The same regardless of the phase. Can we use a feature of these circling coordinates to give us a value that remains the same regardless of phase? As some of you may have already guessed, it is the radius or, more specifically, the magnitude of these coordinates. Let's put this together into a new proposed solution. Originally we had a matrix containing cosine wave analysis frequencies. Now we will add a similar matrix to the equation, but for sine waves of different analysis frequencies, the big idea now is that we can apply the Cosine Wave Analysis Frequency Matrix to give us the x coordinates and then apply the Cosine Analysis Frequency Matrix sine wave to give us the coordinates And the final frequency domain representation will join these two coordinates through the magnitude of these coordinates Here is the final combined version of the transformation applied To our previous test cases, by the way, this combined version of Sine and cosine analysis frequencies is what most people mean when they talk about frequency domain representations of the true discrete Fourier transform, but DFT uses one more trick to simplify things after all generating these two matrices performing this transformation twice and then extracting the magnitude is a bit complicated.

The key is that instead of keeping track of two arrays that have cosine and sine wave sample points, we can jointly represent the two coordinates as sample points of a unit circle in a single array, so let's see how this works really for a signal with 10 sampled points. Breaking down an example is one of the best ways to see the underlying pattern for zero frequency analysis. All points are simply the same coordinate. It is a zero frequency signal. so this is what is expected for the analysis frequency. Things get more interesting if we plot the corresponding points of our cosine and sine samples, we get the next 10 points and intuitively what is happening here is that we are taking a single cycle through the unit circle and sampling points uniformly until we get 10 points for analysis frequency 2, the points of the sine and cosine waves align as follows and once again a good way to interpret this is that we are regularly sampling points from the unit circle, but now the key difference is Let's do it in two cycles, let's do a 3 frequency analysis to make sure the same pattern is maintained.

We plot the sine and cosine points as before and what we see again is that they are points uniformly sampled from the unit circle with an underlying frequency of three cycles. all analysis frequencies the key interpretation here is that each analysis frequency samples points from the unit circle uniformly with an angular frequency that matches the analysis frequency the final part of the simplification to true DFT is when we analyze the points at a unit circle It is quite convenient to represent the coordinates as complex numbers. The real components of these complex numbers correspond to points on the original cosine wave, while the imaginary components map to points on the original sine wave.

In practice, these points are represented compactly as complex exponentials using the Euler equation. foreign formula the final DFT Matrix is composed of complex exponentials where each rule corresponds to sample points of the unit circle at different integer analysis frequencies. One of the most mind-blowing facts about this DFT Matrix is defining it as complex exponentials regularly sampled from the DFT matrix. unit circle It is actually an orthogonal matrix, as a result this matrix ends up satisfying all the requirements we were looking for when we started this journey, so taking a step back, the DFT is fundamentally just a vector product matrix of a signal in the domain of time with the DFT matrix.

When you multiply this Matrix with your signal in the time domain, you get a sequence of complex numbers, the magnitude of each of these complex numbers tells you the contribution of that respective analysis frequency throughout the original signal, the angle of the complex coordinate is a measure of the phase of the signal, the best way to see this is without previously failing in the test case, when we perform the DFT transform and plot the magnitude of the frequency components, we can see that the magnitudes of the peaks don't change as the signal phase changes, but what is it?

Really cool is the angle of these corresponding peaks when plotted on a complex plane, they correspond exactly to the phase of the signal at that frequency, how DFT uniquely captures the frequency and phase contributions in a signal. Earlier we briefly talked about how there are two peaks in the full spectrum of a cosine wave when we use our false transformations, true DFT also has this property for real-valued cosine waves, but now the interpretation is a little different DFT decomposes a signal into a set of complex exponentials the first peak corresponds to a complex exponential that has an underlying frequency that matches the frequency of the original cosine wave, while the second peak corresponds to an exponentialcomplex that has an underlying frequency that moves in the opposite direction and perfectly cancels the imaginary component of the first complex exponential and this will always be the In the case of all real-valued signals, this symmetry is required to cancel the imaginary components and get a real signal.

A final example that is also useful for gaining intuition about what is happening in DFT is to notice how as we change the frequencies of our test signal when the test signal matches one of our analysis frequencies in our DFT Matrix, the values of the respective analysis frequencies are triggered while all other frequencies are centered around zero. This is another way to visualize how our previous similarity measure now materializes on a complex plane from a From an implementation perspective, the DFT is fundamentally just the Matrix Vector product and the inverse DFT to recover the original signal is also a product matrix with the inverse DFT matrix multiplied by a frequency domain representation.

Most of you could probably implement this Matrix Vector product yourselves, but in practice, the true DFT is calculated using an

algorithm

called the fast Fourier transform. I have a whole video on this really elegant algorithm that I actually approached from a completely different perspective. Part of the reason I wanted to make this video is because there are so many great things. comments from the previous video about wanting to understand DFT in a signal processing context and I hope this video delivers on that, so even though DFT has a bad reputation for being quite a complex word game, in the end of the day when going through a It's a kind of playful process of trying out different ideas even with a lot of help.I hope you come away from this video feeling that there is a logical reason why it is defined the way it is defined most of the time in computer science. With so many layers of abstraction you're working with, for example, you can TFT using a single call to an fft function and somehow get away with not really understanding what's going on under the hood, and honestly, that's completely fine, abstraction is very important in terms of just being able to focus on what you need to achieve, but I think removing the layers of abstraction behind how some of these concepts work contains some of the most beautiful ideas in computer science.

Thanks for watching and see you next time. one

If you have any copyright issue, please Contact